When it comes to measuring various physical quantities, meters are indispensable tools. From household electricity consumption to complex industrial processes, accurate measurement is crucial. Historically, analogue meters dominated the landscape, but with technological advancements, digital meters have emerged as a formidable alternative. Understanding the fundamental differences, advantages, and disadvantages of each type is essential for making informed decisions about their application. This cornerstone content outline will delve into a detailed comparison, providing the keywords and concepts necessary for a thorough exploration.

- Measurement and Metering

- Research and Development:

- Defining “Meter”

- Temperature: A Fundamental Physical Quantity

- Mechanical Properties:

- Humidity: Measured by a hygrometer

- Brief Historical Context of Metering Technology

- Analog Meters: The Traditional Approach

- Advantages of Analog Meters:

- Disadvantages of Analog Meters:

- Digital Meters: The Modern Evolution

- Advantages of Digital Meters:

- Disadvantages of Digital Meters:

- Accuracy and Precision:

- Functionality and Versatility: Adapting to Diverse Needs

- Power Requirements: Sustaining Operation

- Data Management and Integration: Leveraging Information

Measurement and Metering

The Importance of Accurate Measurement

Accurate measurement is a cornerstone of success and progress across numerous fields. The importance of this topic can be divided into several key areas:

Quality Control: In manufacturing and production, precise measurements ensure that products meet specified standards and tolerances. This is crucial for maintaining product consistency, preventing defects, and ultimately delivering high-quality goods to consumers. Without accurate measurement, variations in product dimensions, weight, or composition could lead to malfunctions, customer dissatisfaction, and costly recalls.

Safety: In fields ranging from engineering to medicine, accurate measurement is paramount for ensuring safety. In construction, accurate measurements are crucial for structural integrity, helping to prevent collapses and ensuring occupant safety. In healthcare, accurate dosages of medication, precise surgical cuts, and reliable diagnostic measurements are essential to patient well-being and to prevent adverse outcomes.

Efficiency: Accurate measurement directly contributes to operational efficiency. By minimising waste of materials, energy, and time, precise measurements optimise resource utilisation. This involves using the correct amounts of raw material, optimising fuel consumption, or streamlining workflows through accurate timing. Inaccurate measurements can lead to rework, scrap, and delays, which reduce efficiency and increase costs.

Research and Development:

At the forefront of innovation, accurate measurement is indispensable for scientific research and technological development. Scientists rely on precise measurements to conduct experiments, analyse data, and validate theories. Engineers use accurate measurements to design, test, and refine new technologies. Without reliable data derived from accurate measurements, the advancement of knowledge and the creation of new solutions would be severely hampered.

Compliance and Regulations: Many industries are subject to stringent regulations and standards that necessitate accurate measurement. This can include environmental regulations that require precise monitoring of emissions, trade regulations that demand accurate product labelling and weight, or health and safety regulations that specify acceptable levels of contaminants. Adhering to these regulations through accurate measurement is crucial for legal compliance, avoiding penalties, and maintaining public trust.

In essence, accurate measurement is not merely a technical detail; it is a fundamental principle that underpins quality, safety, efficiency, innovation, and regulatory compliance across virtually every sector of human endeavour. Its impact is far-reaching, influencing everything from the products we use daily to the groundbreaking scientific discoveries that shape our future.

Defining “Meter”

Measurement devices are instrumental in various fields, meticulously quantifying physical properties such as voltage, current, temperature, pressure, and time. These devices are sophisticated tools, each designed with specific functionalities to provide accurate and reliable data.

At the core of any measurement device are its fundamental components:

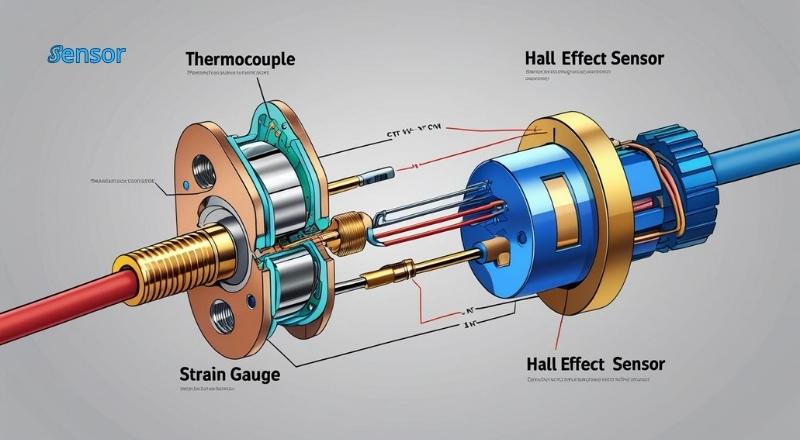

Sensor: This is the component that detects a physical quantity and converts it into a measurable signal, typically electrical. The type of sensor depends on the property being measured: for example, a thermocouple measures temperature, a strain gauge (often bonded to a diaphragm) is used in many pressure sensors, and a Hall effect sensor can measure current.

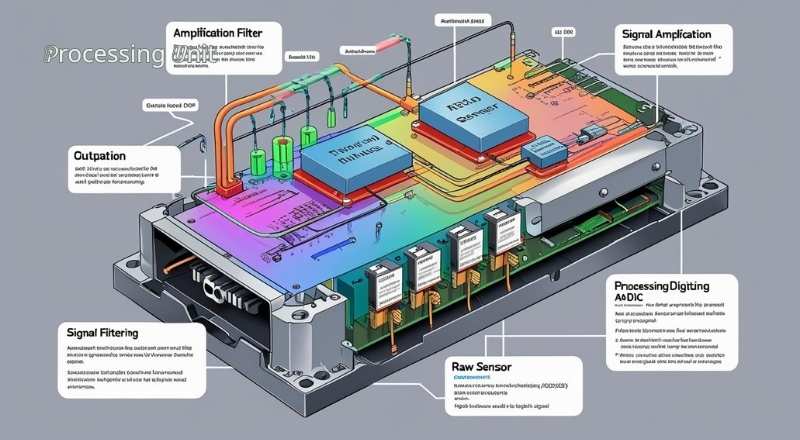

Processing Unit: Once the sensor converts the physical property into a signal, the processing unit takes over. This unit generally comprises amplification, filtering, and analogue-to-digital conversion (ADC) stages. Raw sensor signals are processed (noise removed, weak signals amplified) then digitized for analysis and display. Modern processing units often incorporate microcontrollers or digital signal processors (DSPs) to perform complex calculations, linearisation, and calibration.

Display: The display provides a visual representation of the measured value. This can range from simple analog meters with a needle and scale to advanced digital screens showing numerical readouts, graphs, and trends. The display should be clear, easy to read, and provide the necessary resolution for the application. Some advanced displays also offer touch interfaces for user interaction and data analysis.

These components work in unison to ensure precise and consistent measurements, which are crucial for research, industrial processes, quality control, and everyday applications. The accuracy and reliability of these devices are paramount, as errors in measurement can lead to significant consequences in various domains.
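To make the processing stage more concrete, the Python sketch below converts a raw ADC count into a voltage and then applies a simple two-point (gain and offset) calibration, one basic form of the calibration step described above. The 12-bit resolution, 3.3 V reference, function names, and calibration points are all illustrative assumptions rather than values from any particular instrument.

```python
def adc_to_volts(count: int, bits: int = 12, vref: float = 3.3) -> float:
    """Convert a raw ADC count to volts, assuming an ideal unipolar ADC.

    Conventions differ: some designs divide by 2**bits rather than 2**bits - 1.
    """
    full_scale = (1 << bits) - 1
    if not 0 <= count <= full_scale:
        raise ValueError("count outside ADC range")
    return count * vref / full_scale


def two_point_calibration(raw_lo, ref_lo, raw_hi, ref_hi):
    """Build a linear correction from two readings taken with known inputs."""
    gain = (ref_hi - ref_lo) / (raw_hi - raw_lo)
    offset = ref_lo - gain * raw_lo

    def calibrate(raw):
        # Map a raw (uncalibrated) value onto the reference scale.
        return gain * raw + offset

    return calibrate


# Hypothetical calibration: the sensor output was 0.10 V at a reference of
# 0.0 and 3.00 V at a reference of 100.0 (e.g., degrees Celsius).
calibrate = two_point_calibration(0.10, 0.0, 3.00, 100.0)
volts = adc_to_volts(2048)
print(f"{volts:.3f} V -> {calibrate(volts):.1f} units")
```

Real instruments often add multi-point or polynomial linearisation on top of this, but a gain-and-offset correction is the simplest case.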

Electrical Properties and Their Measurement

In electronics and electrical engineering, a fundamental understanding of electrical properties and their measurement is essential. Each property describes a different aspect of electricity and requires a specific instrument for accurate measurement.

Voltage:

Voltage, or electrical potential difference, is often described as the “push” that drives electrons through a circuit. More precisely, it is the potential energy per unit of charge: one volt equals one joule per coulomb.

- Measurement: Voltage is measured using a voltmeter.

- Unit: Voltage is quantified in volts (V), which serves as its standard unit of measurement.

- Description: A voltmeter is connected in parallel with the component or circuit. Because it has a very high internal resistance, it draws only a small current and therefore does not significantly alter the circuit’s operation.
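Why the voltmeter's internal resistance matters can be shown with a quick calculation. The hypothetical sketch below models a meter with finite input resistance connected across the lower resistor of a two-resistor voltage divider; the 1 MΩ resistors and the 10 MΩ meter input resistance are assumed values chosen to make the loading effect visible.

```python
def parallel(r1: float, r2: float) -> float:
    """Equivalent resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)


def divider_reading(v_source, r_top, r_bottom, r_meter=None):
    """Voltage across r_bottom in a two-resistor divider.

    If r_meter is given, the meter's input resistance is placed in
    parallel with r_bottom, modelling how the meter loads the circuit.
    """
    r_eff = r_bottom if r_meter is None else parallel(r_bottom, r_meter)
    return v_source * r_eff / (r_top + r_eff)


ideal = divider_reading(10.0, 1e6, 1e6)                 # no meter attached
loaded = divider_reading(10.0, 1e6, 1e6, r_meter=10e6)  # assumed 10 Mohm meter
error_pct = 100 * (ideal - loaded) / ideal
print(f"ideal {ideal:.3f} V, measured {loaded:.3f} V, error {error_pct:.1f}%")
```

With high-value resistors like these, the meter reads about 4.8% low; with 1 kΩ resistors, the same meter's error drops to roughly 0.005%.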

Current:

Current is the rate of flow of electric charge (electrons) past a given point in a conductor. It represents the quantity of charge moving over time.

- Measurement: Current is measured using an ammeter.

- Unit: The ampere (A), often shortened to “amp”, serves as the fundamental unit for measuring electric current.

- Description: An ammeter is connected in series with the circuit so that the full current flows through it. Its internal resistance is kept very low so that it does not noticeably reduce that current.

Resistance:

Resistance measures a material’s opposition to the flow of electric current, indicating how much it impedes electron movement.

- Measurement: Resistance is measured using an ohmmeter.

- Unit: The standard unit for resistance is the ohm (Ω).

- Description: Using Ohm’s Law (V=IR), an ohmmeter determines resistance by applying a small voltage to a component and measuring the resulting current. To prevent damage to the meter or inaccurate readings, it is crucial to measure circuit resistance with the power off.

Power:

Electrical power is the rate at which electrical energy is transformed into other forms, such as heat, light, or mechanical motion. It quantifies the amount of work electricity can perform per unit of time.

- Measurement: Power is measured using a wattmeter.

- Unit: The standard unit for power is the watt (W).

- Description: A wattmeter typically senses voltage and current simultaneously and combines them to indicate power; in AC circuits, the reading represents the average of the instantaneous power over each cycle. Power in AC circuits is more intricate, encompassing real, reactive, and apparent power.
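To illustrate how these AC quantities relate, here is a minimal Python sketch that computes them from sampled voltage and current waveforms, loosely in the way a digital wattmeter might. Real power is taken as the average of the instantaneous product v·i, apparent power as V_rms × I_rms, and power factor as their ratio. The 230 V and 5 A RMS values and the 30° current lag are assumed test signals, and deriving reactive power as √(S² − P²) is exact only for sinusoidal waveforms.

```python
import math


def ac_power(v_samples, i_samples):
    """Return (real P in W, apparent S in VA, reactive Q in var, power factor).

    Samples should span a whole number of cycles. Q is derived from S and P,
    which is exact only for purely sinusoidal voltage and current.
    """
    n = len(v_samples)
    p = sum(v * i for v, i in zip(v_samples, i_samples)) / n  # mean of v*i
    v_rms = math.sqrt(sum(v * v for v in v_samples) / n)
    i_rms = math.sqrt(sum(i * i for i in i_samples) / n)
    s = v_rms * i_rms
    q = math.sqrt(max(s * s - p * p, 0.0))
    return p, s, q, p / s


# Assumed test signals: 230 V rms, 5 A rms, current lagging by 30 degrees.
N = 1000  # samples over exactly one cycle
phase = math.radians(30)
v = [230 * math.sqrt(2) * math.sin(2 * math.pi * k / N) for k in range(N)]
i = [5 * math.sqrt(2) * math.sin(2 * math.pi * k / N - phase) for k in range(N)]
p, s, q, pf = ac_power(v, i)
print(f"P = {p:.1f} W, S = {s:.1f} VA, Q = {q:.1f} var, PF = {pf:.3f}")
```

For these inputs, the expected values are S = 1150 VA, P = 1150 × cos 30° ≈ 996 W, Q = 575 var, and a power factor of about 0.866.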

Frequency:

Frequency refers to the number of cycles per second of an alternating current (AC) waveform. It indicates how rapidly the current alternates direction.

- Measurement: It’s determined using a frequency counter.

- Unit: The standard unit for frequency is the hertz (Hz).

- Description: Many frequency counters count the number of cycles within a precisely timed interval; reciprocal counters instead measure the period of the waveform and calculate its reciprocal to obtain the frequency. This is vital for understanding the behaviour of AC circuits and systems.
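The period-based (reciprocal) approach can be sketched in software by timing the rising zero crossings of a sampled waveform and inverting the average period. This is an illustrative approach that assumes a clean signal and a known sample rate; real counters use dedicated timing hardware and input conditioning.

```python
import math


def estimate_frequency(samples, sample_rate):
    """Estimate frequency (Hz) from rising zero crossings.

    Each crossing time is refined by linear interpolation between the two
    samples that straddle zero. Returns None if fewer than two crossings.
    """
    crossings = []
    for k in range(1, len(samples)):
        a, b = samples[k - 1], samples[k]
        if a < 0 <= b:
            frac = -a / (b - a)  # fraction of a sample where the line a->b hits zero
            crossings.append((k - 1 + frac) / sample_rate)
    if len(crossings) < 2:
        return None
    mean_period = (crossings[-1] - crossings[0]) / (len(crossings) - 1)
    return 1.0 / mean_period


fs = 10_000.0  # assumed sample rate, Hz
signal = [math.sin(2 * math.pi * 50.0 * n / fs + 0.3) for n in range(2000)]
print(f"{estimate_frequency(signal, fs):.3f} Hz")
```

Averaging over several periods, as here, reduces the effect of timing error on any single crossing.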

Capacitance:

Capacitance is a property of a capacitor that allows it to store an electric charge. It quantifies how much charge can be stored for a given voltage across the component.

- Measurement: Capacitance is measured using a capacitance meter.

- Unit: The standard unit for capacitance is the farad (F).

- Description: A capacitance meter typically operates by applying a known voltage and then measuring the stored charge, or by determining the capacitor’s impedance at a specific frequency.
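One way to apply the “measure the stored charge” principle is to charge the capacitor with a constant current: the charge delivered is Q = I·t, so C = Q/ΔV = I·t/ΔV. The sketch below shows only that arithmetic; the 100 µA current, the 2.2 ms charging time, and the 1.0 V voltage rise are assumed example values, and the function name is hypothetical.

```python
def capacitance_from_constant_current(current_a, time_s, delta_v):
    """C = Q / dV, where Q = I * t for a constant charging current."""
    if delta_v <= 0:
        raise ValueError("voltage change must be positive")
    charge = current_a * time_s  # coulombs delivered during the interval
    return charge / delta_v      # farads


# Assumed measurement: 100 uA for 2.2 ms raised the voltage by 1.0 V.
c = capacitance_from_constant_current(100e-6, 2.2e-3, 1.0)
print(f"C = {c * 1e9:.0f} nF")
```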

Inductance:

An inductor’s inductance is its ability to store energy in a magnetic field when current passes through it, thereby opposing changes in that current.

- Measurement: Inductance is measured using an inductance meter.

- Unit: The henry (H) serves as the unit of measurement for inductance.

- Description: An inductance meter typically measures the impedance of the inductor at a known frequency to determine its inductance. Inductors play a crucial role in oscillators and power supply systems.
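For an ideal inductor, the impedance magnitude equals the inductive reactance X_L = 2πfL, so L = |Z|/(2πf). The minimal sketch below assumes the inductor can be modelled as a series resistance R plus an ideal inductance, so that |Z|² = R² + X_L², and that R is known; the test frequency and readings are hypothetical.

```python
import math


def inductance_from_impedance(z_mag_ohm, freq_hz, series_r_ohm=0.0):
    """Estimate L (henries) from |Z| measured at a known test frequency.

    Assumes a series R-L model: |Z|^2 = R^2 + (2*pi*f*L)^2.
    """
    if z_mag_ohm < series_r_ohm:
        raise ValueError("|Z| cannot be smaller than the series resistance")
    x_l = math.sqrt(z_mag_ohm**2 - series_r_ohm**2)  # inductive reactance
    return x_l / (2 * math.pi * freq_hz)


# Hypothetical reading: |Z| = 63.0 ohm at 1 kHz, winding resistance 2.0 ohm.
inductance = inductance_from_impedance(63.0, 1_000.0, series_r_ohm=2.0)
print(f"L = {inductance * 1e3:.2f} mH")
```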

Temperature: A Fundamental Physical Quantity

Temperature is a fundamental physical quantity that expresses the degree of hotness or coldness of a substance or object. It is a measure of the average kinetic energy of the atoms and molecules within a system. The higher the temperature, the more vigorously these particles are moving and vibrating.

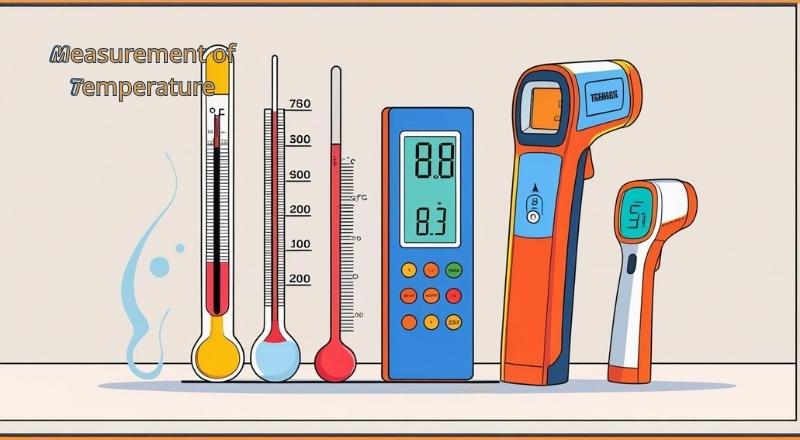

Measurement of Temperature

Temperature is primarily measured using instruments such as:

- Thermometers: These are the most common instruments for measuring temperature. They work on various principles, including:

- Liquid-in-glass thermometers: These utilize the expansion and contraction of a liquid (like mercury or alcohol) within a sealed glass tube.

- Bimetallic strip thermometers: These consist of two different metals bonded together, which bend when heated due to differing rates of thermal expansion.

- Gas thermometers: These measure temperature based on the pressure changes of a fixed volume of gas.

- Pyrometers: These are used for measuring high temperatures, typically in industrial settings where direct contact with the object is not feasible or safe. They work by detecting the thermal radiation emitted by an object. Types include:

- Optical pyrometers: These compare the brightness of the hot object with a calibrated light source.

- Radiation pyrometers: These devices measure the total infrared radiation emitted by an object.

Units of Temperature

Temperature is expressed in several different units, each with its specific applications and historical context:

Celsius (°C): The most widely used unit globally for everyday temperature measurements. On the Celsius scale, the freezing point of water is 0°C and the boiling point is 100°C at standard atmospheric pressure.

Fahrenheit (°F): It is predominantly utilized in the United States and a select few other nations. On the Fahrenheit scale, the freezing point of water is 32°F and the boiling point is 212°F.

Kelvin (K): The kelvin is the base unit of thermodynamic temperature in the International System of Units (SI). The Kelvin scale is an absolute temperature scale: 0 K (absolute zero) is the lowest possible temperature, the theoretical point at which particle motion reaches its minimum. Kelvin temperatures are written without a degree sign (e.g., 300 K, not 300 °K).

The Kelvin scale is widely used in scientific and engineering applications, including cryogenics, physics, and chemistry. A temperature variation of 1°C is exactly equivalent to a temperature variation of 1 K.
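Because the three scales are linearly related, conversion is simple arithmetic: °F = °C × 9/5 + 32 and K = °C + 273.15. A minimal sketch of these conversions (the function names are illustrative):

```python
def celsius_to_fahrenheit(c: float) -> float:
    return c * 9 / 5 + 32


def fahrenheit_to_celsius(f: float) -> float:
    return (f - 32) * 5 / 9


def celsius_to_kelvin(c: float) -> float:
    return c + 273.15


def kelvin_to_celsius(k: float) -> float:
    if k < 0:
        raise ValueError("temperature below absolute zero")
    return k - 273.15


for c in (-40.0, 0.0, 37.0, 100.0):
    print(f"{c:6.1f} C = {celsius_to_fahrenheit(c):6.1f} F "
          f"= {celsius_to_kelvin(c):7.2f} K")
```

Note that temperature differences convert differently from temperature readings: a change of 1 °C is a change of 1 K but 1.8 °F.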

Mechanical Properties:

Pressure: Measured by a pressure gauge or manometer (e.g., in Pascals, PSI, bar).

- Pressure: Pressure is the force applied perpendicularly to a surface divided by the area over which it acts. It is considered a scalar quantity, possessing magnitude without an associated direction. In fluid mechanics, pressure is crucial for understanding the behavior of liquids and gases.

Typically, instruments are used to measure pressure, such as:

- Pressure Gauge: A mechanical or electronic device designed to measure the pressure of a fluid, with common types including Bourdon tube gauges, diaphragm gauges, and bellows gauges.

- Manometer: A U-shaped tube partly filled with a liquid, often mercury or water, used to measure differential or gauge pressure. The pressure difference between the two arms is indicated by the difference in liquid height between them (Δp = ρgh, where ρ is the liquid’s density, g the gravitational acceleration, and h the height difference).

Common units for expressing pressure include:

- Pascals (Pa): The pascal (Pa), the SI derived unit of pressure, is defined as one newton per square meter (N/m²). Because it is a relatively small unit, kilopascals (kPa) or megapascals (MPa) are frequently used for larger pressures.

- Pounds per Square Inch (PSI): A widely used unit in the United States, particularly for tire pressure, water pressure, and industrial applications.

- Bar: A unit of pressure in the metric system, equivalent to precisely 100,000 Pascals. It’s commonly used in meteorology to measure and describe atmospheric pressure.

- Atmosphere (atm): A unit equal to standard atmospheric pressure, approximately the average air pressure at sea level. 1 atm = 101,325 Pa.

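- Millimeters of Mercury (mmHg) or Torr: Units based on the height of a mercury column, used mainly in medicine (e.g., blood pressure) and vacuum applications. 1 Torr is defined as 1/760 atm (about 133.3 Pa), and 1 mmHg is very nearly equal to 1 Torr.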
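The manometer relationship Δp = ρgh and the unit definitions can be combined in a short calculation. The sketch below assumes a water-filled manometer (density about 1000 kg/m³), standard gravity (9.80665 m/s²), and a negligible density for the gas above the liquid; the 25 cm height difference is an example value. The conversion factors use 1 bar = 100,000 Pa and 1 atm = 101,325 Pa (both exact), 1 psi ≈ 6894.757 Pa, and 1 mmHg ≈ 133.322 Pa.

```python
G_STANDARD = 9.80665  # m/s^2, standard acceleration of gravity

# Pascals per unit, for converting a result expressed in Pa.
PA_PER_UNIT = {
    "kPa": 1_000.0,
    "bar": 100_000.0,
    "atm": 101_325.0,
    "psi": 6_894.757293168,
    "mmHg": 133.322387415,
}


def manometer_pressure(height_m: float, density_kg_m3: float = 1000.0,
                       g: float = G_STANDARD) -> float:
    """Pressure difference (Pa) indicated by a liquid height difference."""
    return density_kg_m3 * g * height_m


dp = manometer_pressure(0.25)  # assumed 25 cm difference in a water column
print(f"{dp:.1f} Pa")
for unit, pa in PA_PER_UNIT.items():
    print(f"  = {dp / pa:.4g} {unit}")
```

The same function, given the density of mercury, shows why 760 mm of mercury corresponds to one standard atmosphere.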

Force:

Measured by a force gauge or load cell (e.g., in newtons). In physics, a force is a push or pull that can cause an object with mass to accelerate. Understanding force is vital because it governs the motion of all physical objects. In classical physics, force is a vector quantity, having both magnitude and direction. Its magnitude is measured in newtons (N), the SI unit named after Sir Isaac Newton, whose laws of motion are central to this field.

Force Gauge:

A force gauge is a compact, handheld tool designed to measure both push and pull forces. They come in various types, including mechanical (spring-loaded) and digital (electronic). Digital force gauges often provide greater precision, have different measurement modes (peak, continuous), and can store data. They are commonly used in quality control, product testing, and ergonomic assessments across industries like automotive, aerospace, and packaging.

Load Cell:

A load cell is a device that converts force into an electrical signal. It is more robust and precise than a typical force gauge and is designed for industrial applications where accurate and continuous force measurement is critical. Load cells are often integrated into larger systems like weighing scales, material testing machines, and industrial process control systems. They work on principles like strain gauge technology, where a deformation of the load cell material due to applied force causes a change in electrical resistance, which is then measured and converted into a force reading. Load cells are categorized by their shape (e.g., S-type, canister, beam) and application (e.g., compression, tension, shear).
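Strain-gauge load cells are commonly specified by a rated output in millivolts per volt of excitation (mV/V) at full capacity, and their bridge output scales approximately linearly with load. Assuming that linear model, force can be estimated from the measured output as sketched below; the 2 mV/V rating, 10 V excitation, 500 N capacity, and the function name are hypothetical.

```python
def load_cell_force(v_out_mv, excitation_v, rated_output_mv_per_v,
                    capacity_n, zero_offset_mv=0.0):
    """Estimate force (N) from a strain-gauge bridge output.

    Assumes a linear response in which the full-scale output,
    rated_output_mv_per_v * excitation_v, occurs at the rated capacity.
    zero_offset_mv is the output recorded with no load applied.
    """
    full_scale_mv = rated_output_mv_per_v * excitation_v
    return (v_out_mv - zero_offset_mv) / full_scale_mv * capacity_n


# Hypothetical cell: 2 mV/V at 500 N, excited at 10 V, reading 8.0 mV.
force = load_cell_force(8.0, 10.0, 2.0, 500.0)
print(f"{force:.1f} N")
```

Expressing the rating per volt of excitation is what lets the same calibration hold if the excitation voltage changes.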

Understanding and accurately measuring force is crucial in numerous fields, from engineering design (ensuring structural integrity) to sports science (analyzing athletic performance) and even everyday activities like lifting objects.

Distance/Length:

Measured by a ruler, caliper, or laser distance meter (e.g., in meters, inches).

- Distance/Length: Distance is the measurement of the spatial separation between two points or objects. This fundamental physical quantity is crucial across various scientific, engineering, and everyday applications. It can be measured using a variety of instruments, each suited for different scales and precision requirements:

- Ruler: A simple and common tool for measuring shorter distances, typically in centimeters, millimeters, or inches. Rulers are flat, straight-edged instruments with marked increments.

- Caliper: A highly accurate tool designed for measuring internal and external dimensions, as well as depths. Various types exist, including:

- Vernier Caliper: Utilizes a main scale and a sliding Vernier scale to achieve higher precision, enabling measurements down to a fraction of a millimeter or inch.

- Digital Caliper: Features a digital display for easy reading of measurements, often with the ability to switch between metric and imperial units.

- Dial Caliper: Incorporates a dial indicator for fine measurements, providing an analog display of the fractional part of the measurement.

- Laser Distance Meter (LDM): Also known as a laser rangefinder, this device uses a laser beam to accurately measure distances, particularly over longer ranges. By measuring how long it takes for a laser pulse to travel to a target and back, we can obtain precise distance measurements. LDMs are commonly used in construction, surveying, and interior design.
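The time-of-flight principle reduces to d = c·t/2, since the pulse covers the distance twice. The minimal sketch below assumes propagation at the vacuum speed of light by default (air slows light by only about 0.03%, which the optional refractive index can account for). Note that some commercial LDMs use phase-shift comparison of a modulated beam rather than timing single pulses.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum (exact by definition)


def tof_distance(round_trip_s: float, refractive_index: float = 1.0) -> float:
    """Distance (m) to a target from the round-trip time of a light pulse."""
    return SPEED_OF_LIGHT / refractive_index * round_trip_s / 2


# A round trip of 66.7 ns corresponds to roughly 10 m.
print(f"{tof_distance(66.7e-9):.3f} m")
```

The example also shows why timing resolution matters: 1 ns of timing error corresponds to about 15 cm of distance.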

Units of measurement for distance and length include, but are not limited to:

Metric System:

- Meter (m): The meter is officially established as the standard unit of length in the International System of Units (SI).

- Kilometer (km): 1,000 meters.

- Centimeter (cm): 0.01 meters.

- Millimeter (mm): 0.001 meters.

- Micrometer (µm): 10^-6 meters.

- Nanometer (nm): 10^-9 meters.

Imperial System:

- Inch (in): Commonly used in the United States and a few other countries.

- Foot (ft): 12 inches.

- Yard (yd): 3 feet.

- Mile (mi): 5,280 feet or 1,760 yards.

The choice of instrument and unit depends on the magnitude of the distance being measured, the required accuracy, and the specific application.

Weight/Mass: Measured by a scale or balance

(e.g., in kilograms, pounds).

Weight/Mass: Mass is the quantity of matter an object contains, while weight is the gravitational force acting on that mass. Mass can be measured with a balance scale, which compares an unknown mass to known masses, while a spring scale indicates weight by the extension of a spring. Common units of measurement include kilograms (kg) and grams (g) in the metric system, and pounds (lb) and ounces (oz) in the imperial system. In chemistry and physics, atomic mass units (amu) are used to describe the mass of atoms and molecules. Understanding weight and mass is crucial in fields ranging from physics and chemistry to engineering and everyday activities like cooking.
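The difference between the two instruments becomes clear when gravity changes. A spring scale responds to weight (W = mg), whereas a balance compares masses directly. The sketch below assumes an ideal spring obeying Hooke's law and a scale calibrated at standard gravity; the spring constant and the approximate lunar gravity of 1.62 m/s² are illustrative values.

```python
G_STANDARD = 9.80665  # m/s^2, gravity the scale is assumed to be calibrated for
G_MOON = 1.62         # m/s^2, approximate lunar surface gravity


def spring_extension(mass_kg, g, k_n_per_m):
    """Hooke's law: the spring stretches until k * x equals the weight m * g."""
    return mass_kg * g / k_n_per_m


def indicated_mass(extension_m, k_n_per_m, g_cal=G_STANDARD):
    """Mass shown by a spring scale that assumes it is used at g_cal."""
    return k_n_per_m * extension_m / g_cal


k = 2_000.0  # N/m, hypothetical spring constant
for place, g in [("Earth", G_STANDARD), ("Moon", G_MOON)]:
    x = spring_extension(5.0, g, k)
    print(f"{place}: weight {5.0 * g:.2f} N, "
          f"spring scale shows {indicated_mass(x, k):.2f} kg")
# A balance comparing against known masses would report 5.00 kg in both places.
```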

Velocity/Speed: Measured by a speedometer or anemometer

(e.g., in meters per second, miles per hour).

Velocity/Speed: These terms describe how fast an object moves and, in the case of velocity, in which direction. Speed is a scalar quantity, indicating only magnitude (e.g., 60 miles per hour), while velocity is a vector quantity, indicating both magnitude and direction (e.g., 60 miles per hour north). Speedometers, often found in vehicles, measure instantaneous speed, while anemometers measure wind speed; both are examples of measurement devices. Units of measurement typically include meters per second (m/s) in the International System of Units (SI) and miles per hour (mph) or kilometers per hour (km/h) in more common contexts. Understanding velocity and speed is vital in transportation, sports, meteorology, and astrophysics.
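The scalar/vector distinction shows up clearly when average speed and average velocity are computed for the same trip: speed uses the total path length, while velocity uses the net displacement. A short sketch with made-up 2-D positions in meters, sampled every 10 seconds:

```python
import math


def average_speed_and_velocity(points, dt):
    """Return (average speed, average velocity vector) for a 2-D path.

    points: (x, y) positions in meters, sampled every dt seconds.
    """
    path = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    total_time = dt * (len(points) - 1)
    dx = points[-1][0] - points[0][0]
    dy = points[-1][1] - points[0][1]
    return path / total_time, (dx / total_time, dy / total_time)


# Walk 30 m east, 40 m north, then straight back to the start.
route = [(0, 0), (30, 0), (30, 40), (0, 0)]
speed, velocity = average_speed_and_velocity(route, dt=10.0)
print(f"average speed {speed:.1f} m/s, average velocity {velocity} m/s")
```

Because the walker ends where they started, the average velocity is zero even though the average speed is 4 m/s.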

Flow Rate: Measured by a flow meter

(e.g., in liters per minute, cubic feet per minute).

Flow Rate: This refers to the volume of fluid, whether liquid or gas, that moves past a specific point over a given period. This parameter plays a vital role in various industrial processes, environmental monitoring, and medical applications. Flow meters are specialized devices designed to measure flow rate, utilizing principles such as differential pressure, electromagnetic induction, or ultrasonic waves. Common units of measurement for volume flow rate include liters per minute (L/min) or cubic meters per second (m³/s), while mass flow rate is often expressed in kilograms per second (kg/s). Accurate measurement of flow rate is essential for optimizing processes, ensuring safety, and managing resources efficiently.
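For flow in a full pipe, volumetric flow rate is the cross-sectional area times the mean velocity (Q = A·v), and mass flow rate is density times volumetric flow (ṁ = ρQ). A sketch assuming a full circular pipe of 50 mm internal diameter, a mean velocity of 1.5 m/s, and water at about 1000 kg/m³:

```python
import math


def volumetric_flow(diameter_m, mean_velocity_m_s):
    """Q = A * v for a full circular pipe, in m^3/s."""
    area = math.pi * diameter_m**2 / 4
    return area * mean_velocity_m_s


def mass_flow(q_m3_s, density_kg_m3):
    """m_dot = rho * Q, in kg/s."""
    return density_kg_m3 * q_m3_s


q = volumetric_flow(0.050, 1.5)
print(f"Q = {q:.5f} m^3/s = {q * 60_000:.1f} L/min")  # 1 m^3/s = 60,000 L/min
print(f"mass flow = {mass_flow(q, 1000.0):.3f} kg/s")
```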

Temporal Properties:

Time: Measured by a stopwatch, clock, or timer

(e.g., in seconds, minutes, hours).

Time: Time quantifies the duration of events and the intervals between them. It is commonly measured using instruments such as stopwatches for short durations, clocks for everyday timekeeping, and timers for specific countdowns or intervals. The standard units for measuring time include the second (s), which is the SI base unit, as well as minutes (min) and hours (h). Understanding time is crucial in various fields, from physics to project management, enabling the sequencing and analysis of processes.

Other Properties:

Light Intensity: Measured by a lux meter (e.g., in lux).

Light Intensity: This property describes the amount of light emitted by a source or falling on a surface. Strictly speaking, a lux meter measures illuminance, the luminous flux falling on a surface, in lux (lx), where 1 lx = 1 lumen per square meter; the luminous intensity emitted by a source is expressed in candelas (cd). Light measurement is vital in fields like photography, architecture (for optimal lighting design), and horticulture (for plant growth).
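Luminous intensity and illuminance are linked by the inverse-square law: for a small source of intensity I (in candelas), the illuminance on a surface facing it at distance d is E = I/d², reduced by a factor of cos θ when the light arrives at angle θ from the surface normal. A sketch assuming an ideal point source and no reflections; the 100 cd source is an example value.

```python
import math


def illuminance_lux(intensity_cd, distance_m, incidence_deg=0.0):
    """Illuminance from a point source: E = I * cos(theta) / d^2."""
    return intensity_cd * math.cos(math.radians(incidence_deg)) / distance_m**2


for d in (1.0, 2.0, 4.0):
    print(f"{d:.0f} m: {illuminance_lux(100.0, d):.2f} lx")
```

Each doubling of distance cuts the illuminance to a quarter, which is why a lux meter reading depends strongly on where it is held.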

Sound Level: Measured by a sound level meter (e.g., in decibels).

Sound level, also known as sound pressure level (SPL), quantifies the intensity of sound waves. It is measured with a sound level meter and usually expressed in decibels (dB). Decibels are a logarithmic unit, reflecting the wide range of sound intensities that the human ear can perceive. Sound level measurement is crucial in acoustics, environmental noise control, and occupational health and safety.
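Sound pressure level is defined relative to a reference pressure of 20 µPa in air, roughly the threshold of human hearing: L_p = 20·log₁₀(p_rms / 20 µPa) dB. The sketch below applies that formula to a few example RMS pressures; real sound level meters also apply frequency weightings, which are not modelled here.

```python
import math

P_REF = 20e-6  # Pa, reference sound pressure in air


def spl_db(p_rms_pa: float) -> float:
    """Sound pressure level in dB relative to 20 uPa."""
    if p_rms_pa <= 0:
        raise ValueError("pressure must be positive")
    return 20 * math.log10(p_rms_pa / P_REF)


for p in (20e-6, 0.02, 1.0, 20.0):
    print(f"{p:g} Pa -> {spl_db(p):.1f} dB")
```

Because the scale is logarithmic, doubling the sound pressure adds only about 6 dB, and a millionfold range of pressures spans 120 dB.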

Humidity: Measured by a hygrometer

(e.g., in percentage relative humidity).

Humidity: Humidity is the amount of water vapor in the air. It is measured using a hygrometer, and readings are usually given as a percentage of relative humidity (RH), which compares the actual water vapor content with the maximum the air can hold at that temperature. At 100% RH, the air is saturated and cannot hold more water vapor at that temperature. Humidity plays a significant role in weather patterns, comfort levels in indoor environments, and the preservation of sensitive materials.
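Relative humidity is the ratio of the actual water vapor pressure to the saturation vapor pressure at the same temperature. One common way to estimate it from air temperature and dew point uses the Magnus approximation; the coefficients below (6.112 hPa, 17.62, 243.12 °C) are one widely used set, reasonable over ordinary atmospheric temperatures, so treat this sketch as an approximation rather than a reference method.

```python
import math


def saturation_vapour_pressure_hpa(temp_c: float) -> float:
    """Magnus approximation for saturation vapor pressure over water (hPa)."""
    return 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))


def relative_humidity(temp_c: float, dew_point_c: float) -> float:
    """RH (%) = actual vapor pressure / saturation vapor pressure * 100.

    The actual vapor pressure equals the saturation pressure at the dew
    point, by definition of the dew point.
    """
    e = saturation_vapour_pressure_hpa(dew_point_c)
    es = saturation_vapour_pressure_hpa(temp_c)
    return 100.0 * e / es


print(f"{relative_humidity(25.0, 15.0):.0f} % RH")
```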

pH: Measured by a pH meter (e.g., on a pH scale).

pH: pH indicates a solution’s acidity or alkalinity. It is measured using a pH meter, which gives a value on a pH scale that typically ranges from 0 to 14. A pH of 7 indicates a neutral solution (at 25 °C); values below 7 are acidic and values above 7 are alkaline. pH is a critical parameter in chemistry, biology (e.g., blood pH), environmental science (e.g., water quality), and agriculture (e.g., soil pH).
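pH is defined as the negative base-10 logarithm of the hydrogen-ion activity, which for dilute solutions is commonly approximated by the concentration in mol/L: pH ≈ −log₁₀[H⁺]. A minimal sketch of that approximation, with the neutral point taken as 7 (strictly true only at about 25 °C):

```python
import math


def ph_from_concentration(h_plus_mol_per_l: float) -> float:
    """Approximate pH as -log10[H+], treating concentration as activity."""
    if h_plus_mol_per_l <= 0:
        raise ValueError("concentration must be positive")
    return -math.log10(h_plus_mol_per_l)


def classify(ph: float, neutral: float = 7.0) -> str:
    """Label a pH value; the neutral point of 7 applies at about 25 degC."""
    if abs(ph - neutral) < 1e-9:
        return "neutral"
    return "acidic" if ph < neutral else "alkaline"


for conc in (1e-3, 1e-7, 1e-11):
    ph = ph_from_concentration(conc)
    print(f"[H+] = {conc:g} mol/L -> pH {ph:.1f} ({classify(ph)})")
```

Because the scale is logarithmic, each step of one pH unit corresponds to a tenfold change in hydrogen-ion concentration.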

The evolution of meters has progressed from simple analog devices to highly sophisticated digital instruments, often integrating connectivity for data logging, remote monitoring, and automated control systems. Their accuracy, reliability, and versatility make them indispensable tools in scientific research, industrial processes, commercial applications, and everyday life.

Brief Historical Context of Metering Technology

The evolution of metering technology is a fascinating journey, marked by innovation and increasing precision, mirroring the advancements in science and engineering itself.

Early Mechanical Devices:

The earliest forms of metering were rudimentary mechanical devices. Before the widespread adoption of electricity, these instruments were designed to measure various physical quantities, often in industrial or scientific contexts. Examples include early odometers for measuring distance and simple mechanisms to quantify fluid flow. These devices relied on gears, levers, and other mechanical components to translate a physical input into a readable output, often displayed on a dial or counter. While not directly related to electrical metering, these early mechanical principles laid the groundwork for the more complex devices that would follow, establishing the fundamental concept of quantifying and displaying a measurable parameter.

Emergence of Electrical Measurement:

The true revolution in metering began with the discovery and subsequent widespread adoption of electricity. As electricity became a viable energy source, the need to measure its consumption and characteristics became paramount. Early electrical meters were still largely mechanical, employing principles like electromagnetic induction to turn a disc or a set of gears. These devices, such as the induction disc meter, were designed to measure kilowatt-hours (kWh) for billing purposes. They were robust but often lacked the precision and instantaneous data capabilities of modern meters. The development of direct current (DC) and alternating current (AC) systems also led to the creation of specialized meters for each, including ammeters for current, voltmeters for voltage, and wattmeters for power.

Transition from Mechanical to Electronic Displays:

The latter half of the 20th century witnessed a significant shift from purely mechanical electrical meters to those incorporating electronic components and, crucially, electronic displays. This transition brought several key advantages. Electronic meters offered far greater accuracy and reliability than their mechanical predecessors. They were less prone to wear and tear and could handle a wider range of operating conditions. The move to electronic displays, initially liquid crystal displays (LCDs) or light-emitting diode (LED) displays, provided clearer, more precise readouts. This also opened the door for more advanced functionalities, such as digital data storage, remote reading capabilities (early forms of automatic meter reading), and the ability to display multiple parameters (e.g., voltage, current, power factor) in addition to energy consumption. This leap enabled today’s sophisticated smart meters: advanced electronic devices with integrated communication.

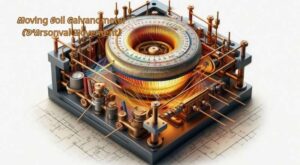

Analog Meters: The Traditional Approach

Fundamental Operating Principles:

- Moving Coil Galvanometer (D’Arsonval Movement): This principle forms the basis of many DC analog meters. It relies on the interaction between a magnetic field and a current-carrying coil.

- Magnetic Field Interaction: A coil, wound on a lightweight frame and free to rotate around a fixed soft iron core, is suspended within the field of a strong permanent magnet. When current passes through the coil, it generates its own magnetic field, which interacts with the permanent magnet’s field to produce a torque on the coil.

- Spring and Pivot: The coil is attached to a delicate pivot system and connected to a spring. The torque generated by the current attempts to rotate the coil, while the spring provides a restoring force that opposes this rotation.

- Pointer Movement: As the current increases, so does the torque on the coil, which rotates until this torque is balanced by the spring’s restoring force. A lightweight pointer attached to the coil moves across a calibrated scale, directly indicating the measured value (e.g., current, voltage). The angular deflection of the coil is directly proportional to the current passing through it.
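The proportionality follows from a torque balance. Assuming a uniform radial field, the electromagnetic torque on a coil of N turns and area A in flux density B is τ = N·B·A·I, and at rest it equals the spring's restoring torque k·θ, so θ = (N·B·A/k)·I. The sketch below evaluates this for a hypothetical movement; every parameter value is an assumption chosen only for illustration.

```python
import math


def deflection_deg(current_a, turns, flux_density_t, coil_area_m2,
                   spring_const_nm_per_rad):
    """Pointer angle where coil torque N*B*A*I equals spring torque k*theta.

    Assumes a uniform radial field, so the torque does not depend on angle.
    """
    torque = turns * flux_density_t * coil_area_m2 * current_a
    return math.degrees(torque / spring_const_nm_per_rad)


# Hypothetical movement: 100 turns, 0.2 T, 1 cm^2 coil, with the spring
# chosen so that 1 mA gives a 90 degree full-scale deflection.
K_SPRING = 100 * 0.2 * 1e-4 * 1e-3 / math.radians(90)
for i_ma in (0.25, 0.5, 1.0):
    angle = deflection_deg(i_ma * 1e-3, 100, 0.2, 1e-4, K_SPRING)
    print(f"{i_ma:.2f} mA -> {angle:.1f} deg")
```

Because the angle scales linearly with current, the scale of a moving-coil meter can be divided into evenly spaced graduations.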

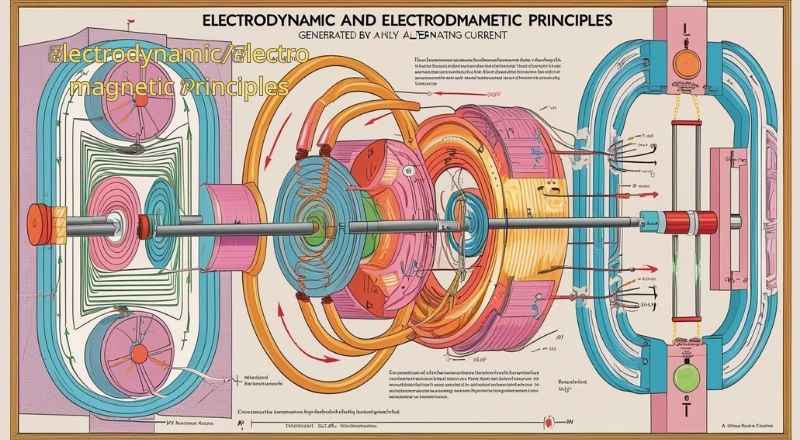

Electrodynamic/Electromagnetic Principles:

These principles are crucial for AC measurements and often involve the interaction of magnetic fields created by currents.

- AC Measurements: Unlike the D’Arsonval movement, which is primarily designed for DC, electrodynamic meters can measure both AC and DC. They typically use fixed and movable coils, both carrying the current to be measured.

- Iron Vanes: In some electromagnetic meters (e.g., moving iron meters), the interaction occurs between a fixed coil and one or more soft iron vanes. In the repulsion type, current in the coil magnetizes a fixed vane and a movable vane with the same polarity, so they repel each other, and the pointer attached to the movable vane indicates the measured value. The force is proportional to the square of the current, so the deflection does not reverse with AC, allowing the meter to be calibrated to read RMS values.
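Because the deflecting torque of a square-law movement follows i², the pointer settles according to the mean of i² over a cycle, and the scale can be marked to read the square root of that mean, that is, the RMS value. By contrast, a D’Arsonval movement responds to the average current, which is zero for a symmetric AC waveform. The sketch below compares the two responses numerically for an assumed 1 A-peak sine wave and an assumed 1 A-peak square wave; it models only the ideal response, not any real meter's frequency limits.

```python
import math

N = 10_000  # samples per cycle of the test waveforms


def mean(values):
    return sum(values) / len(values)


def square_law_reading(samples):
    """Square-law movement: responds to mean(i^2); scale marked as its root (RMS)."""
    return math.sqrt(mean([x * x for x in samples]))


def average_responding_reading(samples):
    """Moving-coil movement: responds to the average current."""
    return mean(samples)


sine = [math.sin(2 * math.pi * k / N) for k in range(N)]   # 1 A peak
square = [1.0 if k < N // 2 else -1.0 for k in range(N)]    # 1 A peak
for name, wave in (("sine", sine), ("square", square)):
    print(f"{name}: square-law {square_law_reading(wave):.3f} A, "
          f"average {average_responding_reading(wave):.3f} A")
```

Both waves have the same 1 A peak, yet the square-law reading distinguishes them (about 0.707 A versus 1.000 A), while the average-responding movement reads essentially zero for both.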

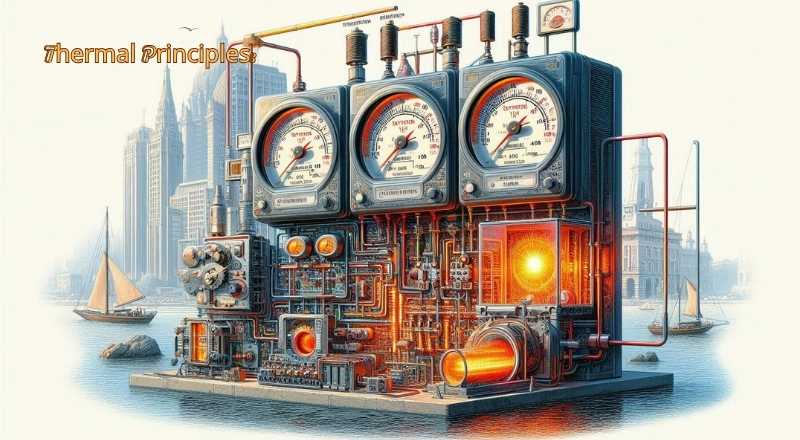

Thermal Principles:

Certain analog meters operate on the principle of heat generated by the current.

- Thermocouples: A thermocouple consists of two dissimilar metals joined at two junctions; when one junction is heated relative to the other, it generates a small voltage approximately proportional to the temperature difference. In a thermocouple meter, the measured current heats the hot junction, and a sensitive galvanometer reads the resulting voltage, which indirectly indicates current or power. These meters are particularly useful for measuring high-frequency AC currents where other methods might be inaccurate.

- Bimetallic Strips: Two metals with different coefficients of thermal expansion are bonded together to form a bimetallic strip. When heated (e.g., by current flowing through a heating element), the strip bends because the two metals expand unequally. The bending is roughly proportional to the temperature rise, which in turn depends on the heating power and therefore on the square of the current. A pointer attached to the bimetallic strip indicates the measured value. This principle is often used in overload protection devices and some older ammeters.

Key Components of an Analog Meter:

- Coil: At the heart of most analog meters is a coil of wire, often wound around a core. When an electric current flows through this coil, it creates a magnetic field.

- Magnet: A permanent magnet is placed close to the coil. The interaction between the magnetic field produced by the coil and the field of the permanent magnet is what causes the meter’s movement.

- Spring: The restoring force is typically provided by a delicate spring, or often a pair of springs. As the coil rotates due to the magnetic interaction, the spring opposes the movement, returning the pointer to zero when no current is applied and ensuring a linear response.

- Pointer: Attached to the coil, the pointer is a lightweight needle that moves across the scale to indicate the measured value. Its design is crucial for accuracy and readability, minimizing inertia and ensuring precise readings.

- Scale (graduated): This is the calibrated display, marked with units and divisions, from which the user reads the measured quantity (e.g., volts, amperes, ohms). The accuracy of the meter relies heavily on the precision and linearity of its scale.

- Damping mechanism (air or fluid): A damping mechanism is incorporated to prevent the pointer from oscillating excessively and to help it settle quickly. This can be an air-damping system (where a vane moves within a confined air chamber) or a fluid-damping system (less common in general-purpose meters). Damping ensures a stable and readable measurement without prolonged fluctuations.
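The moving system (coil, pointer, and spring) behaves approximately like a second-order mechanical system, J·θ'' + D·θ' + k·θ = τ, where J is the inertia of the moving parts, D the damping coefficient, and k the spring constant. The sketch below integrates that equation numerically (semi-implicit Euler) for a sudden, constant input torque and reports the overshoot, to show why damping matters; the parameter values are arbitrary normalised numbers, not data for a real movement.

```python
def step_response(damping, inertia=1.0, spring=1.0, torque=1.0,
                  dt=1e-3, t_end=60.0):
    """Simulate J*theta'' + D*theta' + k*theta = tau, starting at rest.

    Uses semi-implicit (symplectic) Euler integration and returns the
    pointer angle after each time step.
    """
    theta, omega = 0.0, 0.0
    angles = []
    for _ in range(int(t_end / dt)):
        alpha = (torque - damping * omega - spring * theta) / inertia
        omega += alpha * dt
        theta += omega * dt
        angles.append(theta)
    return angles


def overshoot_percent(angles, final):
    """How far the pointer swings past its resting value, in percent."""
    return max(0.0, (max(angles) - final) / final * 100)


FINAL = 1.0  # torque / spring with the defaults: the true reading
for zeta in (0.1, 0.7, 1.0):
    # With J = k = 1, the damping ratio is zeta = D / (2 * sqrt(J * k)) = D / 2.
    angles = step_response(damping=2 * zeta)
    print(f"damping ratio {zeta}: overshoot "
          f"{overshoot_percent(angles, FINAL):.1f}%, "
          f"final angle {angles[-1]:.3f}")
```

With light damping the pointer swings far past the true value (roughly 73% at a damping ratio of 0.1) before settling, whereas damping ratios around 0.7 to 1 give a fast, steady reading.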

Advantages of Analog Meters:

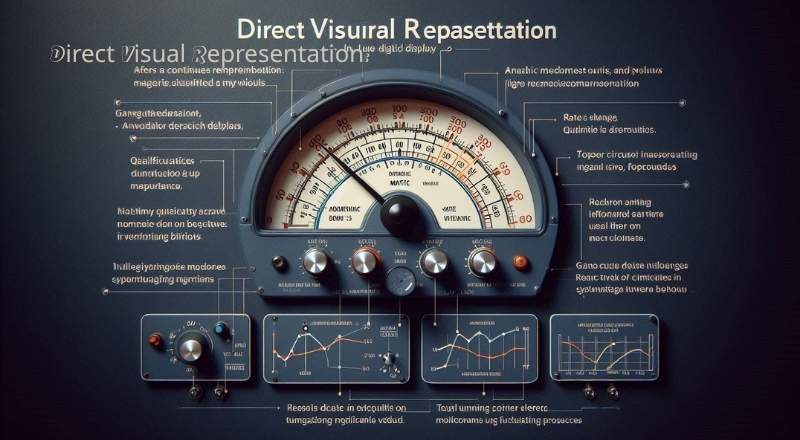

Direct Visual Representation:

Analog meters offer a key advantage: a smooth, continuous visual of the measured quantity. Unlike digital displays, their sweeping pointer intuitively shows trends, rates of change, and relative magnitudes. This is ideal for applications prioritizing real-time dynamics and qualitative assessment over precise numbers, like tuning circuits or monitoring fluctuating processes. The human eye easily perceives subtle pointer shifts, providing a holistic understanding of system behavior.

No Power Required (for passive types):

Many types of analog meters, particularly passive designs like galvanometers or some moving-coil ammeters and voltmeters, do not require an external power source to operate. They draw energy directly from the circuit being measured, which makes them invaluable where power is limited or where relying on external power could introduce additional risks. This self-sufficiency contributes to their robustness and suitability for field use in remote or austere environments.

Cost-Effectiveness:

Analog meters are typically cheaper to produce than digital meters due to simpler internal circuitry and mechanical design. This makes them a more economical choice for educational, hobbyist, or industrial uses where high precision isn’t paramount, but reliable measurement is still needed. The cost savings can be substantial when equipping multiple workstations or deploying many measurement devices.

Robustness:

Analog meters tolerate electrical noise and transients well. The inertia of their moving mechanism filters out rapid fluctuations, making them suitable for noisy industrial environments and power systems where voltage surges or dips are common.

Visual Stability for Fluctuating Readings:

When measuring quantities that fluctuate rapidly, the inertia of an analog meter's pointer can be a distinct advantage. Instead of displaying a constantly changing series of numbers that are difficult to interpret, the pointer's natural damping smooths out minor fluctuations. This provides a more stable, easier-to-read average value, allowing the user to quickly gauge the typical magnitude of the measurement without the mental effort of averaging rapidly changing digital readouts. This characteristic is particularly beneficial when troubleshooting dynamic systems or monitoring processes with inherent variability.
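The smoothing effect of pointer inertia behaves much like a low-pass filter. As a rough analogy (an assumption for illustration: a real movement is closer to a second-order system, and a simple first-order exponential filter stands in for it here), the sketch below feeds a noisy reading through an exponential moving average and compares how much the raw and smoothed values jump around. The function name, signal level, noise amplitude, and smoothing factor are all hypothetical.

```python
import random
import statistics


def exponential_smooth(samples, alpha):
    """First-order low-pass filter: y[n] = y[n-1] + alpha * (x[n] - y[n-1]).

    A smaller alpha means more 'inertia': heavier smoothing, slower response.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    y = samples[0]
    for x in samples:
        y += alpha * (x - y)
        smoothed.append(y)
    return smoothed


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are repeatable
    # Hypothetical reading: a steady 12.0 V with +/-0.5 V of random fluctuation.
    raw = [12.0 + rng.uniform(-0.5, 0.5) for _ in range(2000)]
    settled = exponential_smooth(raw, alpha=0.05)[200:]  # skip initial settling
    print(f"raw spread:      {statistics.pstdev(raw):.3f} V")
    print(f"smoothed spread: {statistics.pstdev(settled):.3f} V")
    print(f"smoothed mean:   {statistics.fmean(settled):.3f} V")
```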

Disadvantages of Analog Meters:

Analog meters, while historically significant and still present in certain applications, come with a range of inherent disadvantages that limit their precision, functionality, and durability compared to their digital counterparts. These drawbacks are crucial to understand when selecting measurement tools for various applications.

Reading Accuracy & Precision:

One of the most significant limitations of analog meters lies in the accuracy and precision of their readings.

Parallax Error:

The angle from which an analog meter's pointer is viewed can significantly affect the perceived reading. If the viewer's eye is not directly perpendicular to the meter's scale, the pointer can appear to sit at a different position from its actual one, leading to inaccurate measurements. Because it depends on the observer's position rather than on the instrument itself, parallax is a frequent source of human error.

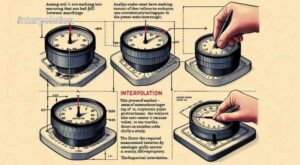
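As a rough illustration of the geometry, assume the pointer tip sits a small gap h above the scale and the line of sight is tilted by an angle θ from perpendicular. Treating the scale as locally flat, the pointer then appears shifted along the scale by about h·tan θ. The sketch below converts that shift into a reading error; the function name, gap, angles, and scale length are hypothetical.

```python
import math


def parallax_error(gap_mm, view_angle_deg, scale_mm_per_unit):
    """Approximate reading error (in measurement units) caused by parallax.

    gap_mm:            height of the pointer above the scale surface
    view_angle_deg:    how far the line of sight is from perpendicular
    scale_mm_per_unit: physical scale length corresponding to one unit
    """
    shift_mm = gap_mm * math.tan(math.radians(view_angle_deg))
    return shift_mm / scale_mm_per_unit


if __name__ == "__main__":
    # Hypothetical 0-10 V meter: 90 mm scale (9 mm per volt), 2 mm pointer gap.
    for angle in (0, 10, 20, 30):
        err = parallax_error(gap_mm=2.0, view_angle_deg=angle, scale_mm_per_unit=9.0)
        print(f"{angle:>2} degrees off-axis -> error of about {err:.3f} V")
```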

Interpolation:

Analog scales often have markings at specific intervals, requiring the user to estimate values that fall between these markings. This process of interpolation introduces subjectivity and potential for error, as different users might interpret the same pointer position slightly differently. The finer the required measurement, the greater the challenge of accurate interpolation.
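Interpolation is, in effect, a linear estimate between the two nearest markings. In this illustrative sketch (hypothetical function name and values), two observers judge the same pointer position as 60% and 70% of the way between the 4.0 V and 4.5 V marks, which is enough to make their readings differ by 0.05 V.

```python
def interpolate_reading(lower_mark, upper_mark, fraction_past_lower):
    """Linear estimate of a value between two adjacent scale markings.

    fraction_past_lower is the observer's visual estimate (0.0 to 1.0) of how
    far the pointer sits between the lower and upper marks.
    """
    if not 0.0 <= fraction_past_lower <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return lower_mark + fraction_past_lower * (upper_mark - lower_mark)


if __name__ == "__main__":
    # Pointer between the 4.0 V and 4.5 V marks, judged differently by two people.
    observer_a = interpolate_reading(4.0, 4.5, 0.6)
    observer_b = interpolate_reading(4.0, 4.5, 0.7)
    print(f"observer A reads {observer_a:.2f} V, observer B reads {observer_b:.2f} V")
    print(f"disagreement: {abs(observer_b - observer_a):.2f} V")
```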

Scale Resolution:

The precision of an analog meter is fundamentally limited by the number and spacing of the markings on its scale. A meter with few or widely spaced markings inherently has lower resolution, making it difficult to obtain highly precise readings. This limitation is a physical constraint of the meter's design.
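The link between scale layout and resolution is simple arithmetic: one division is worth the range divided by the number of divisions. A common rule of thumb, assumed here rather than taken from any standard, is that a careful reader can resolve to about half a division. The function names and scale values below are hypothetical.

```python
def division_value(full_scale, divisions):
    """Measurement value represented by one scale division."""
    if divisions <= 0:
        raise ValueError("divisions must be positive")
    return full_scale / divisions


def reading_uncertainty(full_scale, divisions, fraction_of_division=0.5):
    """Rule-of-thumb reading uncertainty: a fraction (default half) of a division."""
    return fraction_of_division * division_value(full_scale, divisions)


if __name__ == "__main__":
    # The same 0-10 V range printed with a coarse and a fine scale (hypothetical).
    for divs in (10, 50):
        print(f"{divs:>2} divisions: {division_value(10.0, divs):.2f} V per division, "
              f"about +/-{reading_uncertainty(10.0, divs):.2f} V when read")
```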

Limited Functionality:

Analog meters are typically designed to measure one or a few specific parameters, such as voltage or current. They lack the versatility and multi-functionality often found in digital meters, which can frequently measure a wider array of parameters and offer additional features within a single device. This specialized nature can be a disadvantage in applications requiring diverse measurements.

Physical Wear and Tear:

The operation of analog meters relies on moving parts such as the coil, its pivots, the springs, and the pointer. These mechanical components are subject to friction and wear over time and with repeated use. This can lead to degraded performance, calibration shifts, and eventual failure of the meter, requiring more frequent maintenance or replacement than solid-state digital devices.

Susceptibility to Mechanical Shock/Vibration:

The delicate moving parts within analog meters are highly sensitive to external mechanical shock or vibration. Even minor impacts or prolonged exposure to vibrations can cause the pointer to become misaligned, affect the internal calibration, or damage the internal mechanisms. This makes them less robust for use in harsh environments.

No Data Logging or Output:

A significant modern disadvantage of analog meters is their inability to integrate easily with digital systems for data storage, analysis, or automated control. They do not typically offer any digital output, so readings must be recorded by hand, which is inefficient and prone to human error. This lack of connectivity limits their utility in contemporary data-driven environments.

Manual Range Selection:

Analog meters often require manual range selection, which adds steps for the user and risks damaging the meter if the wrong range is chosen or the input exceeds the selected range. Digital meters often feature auto-ranging capabilities, simplifying operation and reducing the risk of damage.
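Auto-ranging can be sketched as a simple rule: choose the smallest range whose full scale still exceeds the input, so the reading keeps as much resolution as possible without overloading. The range list, function name, and the one-shot selection below are simplifying assumptions; real meters often add hysteresis and step between ranges as new readings arrive.

```python
# Hypothetical DC voltage ranges (full-scale values in volts), smallest first.
RANGES_V = (0.2, 2.0, 20.0, 200.0, 1000.0)


def select_range(measured_v, ranges=RANGES_V):
    """Return the smallest full-scale range that can display |measured_v|.

    Raises OverflowError if the input exceeds the largest range, mirroring the
    'OL' (overload) indication many meters show.
    """
    magnitude = abs(measured_v)
    for full_scale in ranges:
        if magnitude < full_scale:
            return full_scale
    raise OverflowError(f"{measured_v} V exceeds the {ranges[-1]} V maximum range")


if __name__ == "__main__":
    for v in (0.015, 1.5, 12.0, -48.0):
        print(f"{v:>7} V -> {select_range(v)} V range")
```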

Digital Meters: The Modern Evolution

Digital meters represent a significant leap forward from their analog predecessors, offering enhanced accuracy, functionality, and connectivity across a wide range of measurement applications. Driven by microelectronics and digital signal processing, these tools are vital in electronics, engineering, environmental monitoring, and healthcare.

Fundamental Operating Principles: The Digital Transformation

At the heart of every digital meter lies a sophisticated process that converts real-world analog signals into a digital format that can be processed, displayed, and stored. This transformation occurs through several key stages:

Analog-to-Digital Conversion (ADC): Bridging the Analog and Digital Worlds

The initial and most critical step is the conversion of continuous analog signals (like voltage, current, temperature, or pressure) into discrete digital values. This process involves three main phases:

- Sampling: This phase involves taking discrete snapshots of the continuous analog signal at regular intervals. The sampling rate, which sets how frequently snapshots are captured, is crucial. The Nyquist-Shannon sampling theorem states that to reconstruct the original waveform accurately, the sampling rate must be greater than twice the highest frequency present in the analog signal. Higher sampling rates generally lead to more accurate representations of rapidly changing signals.

- Quantization: After sampling, each analog value is given a specific numerical value from a limited set of options. This process transforms a continuous range of analog signals into a set of specific digital levels. The number of bits in the ADC (e.g., 8-bit, 16-bit, 24-bit) determines the resolution of the quantization. More bits mean more distinct levels, providing finer resolution and reducing quantization error. It’s like breaking a continuous line into a set number of distinct points.

- Encoding: In the final step of ADC, the quantized values are converted into a binary code (a sequence of 0s and 1s) that can be understood and processed by digital circuitry. Binary representation forms the core of all digital data.
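The three ADC phases can be illustrated with an idealised converter. This sketch assumes a unipolar 0 to 5 V input range, an N-bit converter with uniform steps, and rounding to the nearest code; real ADCs differ in architecture, offset, and non-linearity, and all function names here are illustrative. It samples a test sine wave, quantizes each sample, encodes it as a binary string, checks the quantization error, and shows the aliasing that occurs when the sampling rate is too low.

```python
import math

V_REF = 5.0  # assumed 0 to 5 V input range (hypothetical)


def sample(signal, rate_hz, count):
    """Sampling: evaluate a continuous signal at t = n / rate_hz."""
    return [signal(n / rate_hz) for n in range(count)]


def quantize(voltage, bits, v_ref=V_REF):
    """Quantization: nearest of 2**bits integer codes, clamped to the valid range."""
    max_code = 2 ** bits - 1
    code = round(voltage / v_ref * max_code)
    return min(max(code, 0), max_code)


def encode(code, bits):
    """Encoding: fixed-width binary string, e.g. 5 -> '00000101' for 8 bits."""
    return format(code, f"0{bits}b")


def code_to_voltage(code, bits, v_ref=V_REF):
    """Inverse of quantize(), used here only to measure quantization error."""
    return code / (2 ** bits - 1) * v_ref


def alias_frequency(signal_hz, rate_hz):
    """Apparent frequency of a sampled sine wave, folded into 0..rate_hz / 2."""
    return abs(signal_hz - rate_hz * round(signal_hz / rate_hz))


if __name__ == "__main__":
    def tone(t):
        """Hypothetical 50 Hz test signal centred in the input range."""
        return 2.5 + 2.0 * math.sin(2 * math.pi * 50 * t)

    for v in sample(tone, rate_hz=1000, count=5):
        code = quantize(v, bits=8)
        print(f"{v:6.3f} V -> code {code:3d} -> {encode(code, 8)}")

    # More bits give a smaller step; the worst error stays within half a step.
    for bits in (8, 12, 16):
        step = V_REF / (2 ** bits - 1)
        worst = max(abs(v - code_to_voltage(quantize(v, bits), bits))
                    for v in sample(tone, 1000, 200))
        print(f"{bits:2d}-bit: step {step * 1e3:.4f} mV, worst error {worst * 1e3:.4f} mV")

    # 60 Hz sampled at only 100 Hz breaks the Nyquist condition and appears as 40 Hz.
    print(f"60 Hz sampled at 100 Hz appears as {alias_frequency(60, 100)} Hz")
```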

Microcontroller/Microprocessor Processing: The Brains of the Operation

After the analog signal is converted to digital data, a microcontroller or microprocessor takes over to perform various computational and control tasks. This digital “brain” is responsible for:

- Calculations: Performing mathematical operations on the digital data, such as scaling, averaging, root mean square (RMS) calculations for AC signals, and unit conversions. For example, a multimeter might calculate power from voltage and current readings.

- Signal Conditioning: Further refining the digital signal to remove noise, filter out unwanted frequencies, or apply compensation for sensor non-linearities. This improves the reliability of the measurement.

- Logical Operations: Implementing features like auto-ranging, min/max hold, peak detection, and setting alarms based on predefined thresholds.

- Control: Managing the various components of the digital meter, including the display, input/output ports, and internal memory.
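A few of these processing steps fit in a handful of lines. The example assumes a hypothetical temperature sensor whose conditioned output spans the full range of a 12-bit ADC for -50 °C to 150 °C; it scales raw codes to engineering units, averages a burst of samples to reduce noise, converts to Fahrenheit, and raises an alarm flag above a user-set threshold. The constants, readings, and function names are illustrative, not taken from a real device.

```python
from statistics import fmean

ADC_BITS = 12                    # hypothetical converter resolution
T_MIN_C, T_MAX_C = -50.0, 150.0  # assumed sensor span mapped onto the full ADC range


def code_to_celsius(code):
    """Scaling: linear map from raw ADC code to degrees Celsius."""
    max_code = 2 ** ADC_BITS - 1
    return T_MIN_C + (code / max_code) * (T_MAX_C - T_MIN_C)


def celsius_to_fahrenheit(t_c):
    """Unit conversion."""
    return t_c * 9.0 / 5.0 + 32.0


def process_burst(codes, alarm_above_c):
    """Average a burst of raw codes, convert units, and evaluate an alarm."""
    mean_c = fmean(code_to_celsius(c) for c in codes)  # averaging reduces random noise
    return {
        "celsius": mean_c,
        "fahrenheit": celsius_to_fahrenheit(mean_c),
        "alarm": mean_c > alarm_above_c,  # logical operation: threshold alarm
    }


if __name__ == "__main__":
    burst = [1430, 1436, 1428, 1441, 1433]  # made-up raw readings
    result = process_burst(burst, alarm_above_c=25.0)
    print(f"{result['celsius']:.2f} C / {result['fahrenheit']:.2f} F, "
          f"alarm={result['alarm']}")
```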

Digital Display Technology: Presenting the Data Clearly

The processed digital information is then presented to the user via a digital display. Unlike analog meters with their physical needles, digital displays offer clear, unambiguous numerical readings. Common display technologies include:

- LCD (Liquid Crystal Display): Known for its low power consumption, LCDs are commonly used in portable digital meters. They work by selectively blocking or transmitting light to create numbers and symbols.

- LED (Light Emitting Diode): LEDs produce their own light, offering bright and easily readable displays, especially in low-light conditions. They are often used for individual segments or full-color graphic displays.

- OLED (Organic Light Emitting Diode): OLEDs offer superior contrast, wider viewing angles, and faster response times compared to LCDs, and they can be made flexible. They are increasingly found in high-end digital instruments.
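Many segmented LCD and LED meter displays build each digit from seven segments labelled a to g. As an illustrative sketch (the bit assignment a = bit 0 through g = bit 6 is a common convention assumed here, not taken from any particular display driver, and the function names are hypothetical), the code below maps characters to segment bit patterns and renders a reading as ASCII art.

```python
# Illustrative seven-segment encoding, assuming a = bit 0, b = bit 1, ..., g = bit 6.
#
#    aaa
#   f   b
#    ggg
#   e   c
#    ddd
SEGMENTS = {
    "0": 0x3F, "1": 0x06, "2": 0x5B, "3": 0x4F, "4": 0x66,
    "5": 0x6D, "6": 0x7D, "7": 0x07, "8": 0x7F, "9": 0x6F,
    "-": 0x40, " ": 0x00,
}


def lit(pattern, segment):
    """True if segment 'a'..'g' is switched on in the bit pattern."""
    return bool((pattern >> "abcdefg".index(segment)) & 1)


def render(text):
    """Draw a string of digits as three rows of ASCII seven-segment art."""
    rows = ["", "", ""]
    for ch in text:
        p = SEGMENTS[ch]
        rows[0] += " " + ("_" if lit(p, "a") else " ") + " "
        rows[1] += (("|" if lit(p, "f") else " ")
                    + ("_" if lit(p, "g") else " ")
                    + ("|" if lit(p, "b") else " "))
        rows[2] += (("|" if lit(p, "e") else " ")
                    + ("_" if lit(p, "d") else " ")
                    + ("|" if lit(p, "c") else " "))
    return rows


if __name__ == "__main__":
    for row in render("12-34"):
        print(row)
```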

The Vital Elements of a Digital Meter

A digital meter is an integrated system comprising several essential components that work in harmony to achieve accurate and reliable measurements:

Sensor (transducer): This is the meter's interface with the physical world. A sensor detects physical quantities like temperature, pressure, light, or electrical current and converts them into electrical signals, usually in the form of voltage or current. Examples include thermocouples for temperature, strain gauges for pressure, and shunts for current.

Signal Conditioning Circuit: This circuit prepares the raw electrical signal from the sensor for the ADC. It typically involves amplification to boost weak signals, filtering to remove noise, and linearization to correct for sensor non-linearities, ensuring the signal is within the optimal range for the ADC.
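The core of the amplification step is choosing a gain and offset that stretch the sensor's output span across the ADC's input range. A minimal sketch, assuming an ideal linear amplifier stage and hypothetical figures (a sensor producing 10 mV to 50 mV and an ADC accepting 0 to 3.3 V); the function name is illustrative.

```python
def conditioning_gain_offset(sensor_min_v, sensor_max_v, adc_min_v, adc_max_v):
    """Gain and offset so that sensor_min -> adc_min and sensor_max -> adc_max.

    Assumes an ideal linear stage: v_out = gain * v_in + offset.
    """
    if sensor_max_v <= sensor_min_v:
        raise ValueError("sensor span must be positive")
    gain = (adc_max_v - adc_min_v) / (sensor_max_v - sensor_min_v)
    offset = adc_min_v - gain * sensor_min_v
    return gain, offset


if __name__ == "__main__":
    gain, offset = conditioning_gain_offset(0.010, 0.050, 0.0, 3.3)
    print(f"gain = {gain:.1f} V/V, offset = {offset:.3f} V")
    for v_in in (0.010, 0.030, 0.050):
        print(f"{v_in * 1000:4.0f} mV in -> {gain * v_in + offset:.3f} V at the ADC")
```

Without this stage, the 40 mV sensor span would occupy only a small fraction of the ADC's 3.3 V input range, wasting most of its available codes.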

Analog-to-Digital Converter (ADC): As detailed above, this crucial component transforms the conditioned analog signal into a digital format.

Microcontroller/DSP (Digital Signal Processor):

This is the central processing unit responsible for performing calculations, signal processing algorithms, controlling the meter’s functions, and managing data flow. A DSP is specialized for high-speed, repetitive mathematical operations common in signal processing.

Digital Display: The visual output interface, presenting the measured values to the user.

Power Supply: Provides the necessary electrical energy for all components of the digital meter to operate. This can be batteries for portability, or an external AC/DC adapter.

Input/Output ports (for data logging, connectivity): These ports enable the meter to communicate with external devices. Common examples include:

USB: For direct connection to a computer for data transfer, firmware updates, and remote control.

Bluetooth: For wireless short-range communication with smartphones, tablets, or other compatible devices.

Wi-Fi: For wireless connection to local area networks (LANs) and the internet, enabling remote monitoring, cloud data storage, and integration into IoT (Internet of Things) systems.

RS-232/RS-485: These older serial communication standards are still used in industrial and laboratory settings for data exchange.

Advantages of Digital Meters:

The shift towards digital meters has brought about numerous benefits that significantly enhance measurement accuracy, ease of use, and analytical capabilities.

- High Accuracy and Precision: This is arguably the most significant advantage.

- Numeric display eliminates parallax and interpolation errors: Unlike analog meters, where the user must visually estimate the needle's position between scale markings (leading to parallax errors due to viewing angle) and interpolate values, digital meters provide a direct, unambiguous numerical reading. This removes two common sources of human reading error and increases measurement reliability.

- Clear, Unambiguous Readings: The direct numerical value is easy to read and interpret, even for untrained users, reducing the chance of misreading.

- Enhanced Functionality: Digital meters come packed with features that would be impractical or impossible to implement in an analog design.

- Multiple measurement ranges (auto-ranging): Digital meters often select the right measurement range automatically, saving you from adjusting it manually and avoiding overload or under-range errors. This makes operation easier and helps protect the meter.

- Multiple parameters: Digital multimeters, for example, can measure a wide array of electrical parameters beyond just voltage and current, including resistance, capacitance, frequency, duty cycle, and even temperature, making them incredibly versatile tools.

- Min/Max hold, peak detection: These features allow users to capture and display the minimum or maximum values recorded over a period, or detect transient peaks, which are crucial for troubleshooting intermittent problems or analyzing dynamic signals.

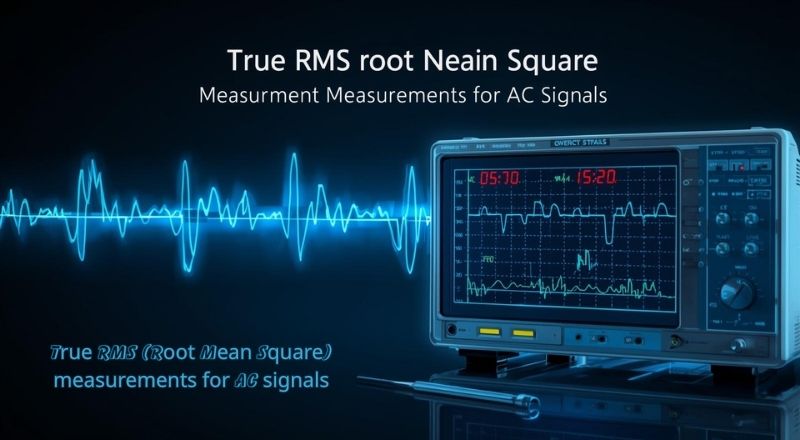

True RMS (Root Mean Square) measurements for AC signals:

- Simpler average-responding meters measure the rectified average of an AC signal and scale it by the sine-wave form factor (about 1.11), so they read correctly only for a pure sine wave. True RMS meters instead measure the effective (heating) value directly, so they remain accurate for non-sinusoidal waveforms and give a truer representation of power. This is critical in modern electrical systems, where electronic loads distort the waveform.

- Data Logging and Connectivity: This capability transforms a simple measurement tool into a powerful data acquisition system.

- Internal memory: Many digital meters can store a large number of readings internally, allowing for long-term monitoring without constant supervision.

- USB, Bluetooth, Wi-Fi connectivity for data transfer to computers/networks: Seamlessly transfer stored data or stream real-time measurements to external devices, enabling more in-depth analysis.

- Integration with software for analysis and reporting: Specialized software applications can analyze the logged data, generate graphs, create custom reports, and even perform statistical analysis, providing valuable insights into system performance or anomalies. This supports preventive maintenance, quality assurance, and research.

- Durability (less mechanical wear): With fewer or no moving mechanical parts (unlike the delicate coil and needle of an analog meter), digital meters are inherently more robust and resistant to physical shock and vibration, leading to a longer lifespan and reduced maintenance.

- Self-Calibration and Diagnostics: Many advanced digital meters incorporate internal self-test routines and calibration functions, allowing them to verify their accuracy or guide the user through calibration procedures. This ensures ongoing measurement reliability and simplifies maintenance.
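The difference behind True RMS measurement, listed among the advantages above, is easy to demonstrate numerically. This sketch assumes an average-responding meter that rectifies the signal, averages it, and multiplies by the sine-wave form factor π/(2√2) ≈ 1.111, whereas a True RMS meter takes the square root of the mean of the squared samples. The test waveforms and function names are illustrative; both methods agree on a sine wave but disagree on a square wave or a spiky waveform.

```python
import math

SINE_FORM_FACTOR = math.pi / (2 * math.sqrt(2))  # RMS / rectified average for a sine


def true_rms(samples):
    """Square root of the mean of the squared samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def average_responding(samples):
    """Rectified average scaled by the sine form factor (correct only for sines)."""
    return SINE_FORM_FACTOR * sum(abs(x) for x in samples) / len(samples)


def waveform(kind, n=10_000, peak=1.0):
    """One full cycle of a hypothetical test waveform at n evenly spaced points."""
    out = []
    for i in range(n):
        s = math.sin(2 * math.pi * i / n)
        if kind == "sine":
            out.append(peak * s)
        elif kind == "square":
            out.append(peak if s >= 0 else -peak)
        elif kind == "pulse":  # crude stand-in for narrow current spikes
            out.append(math.copysign(peak, s) if abs(s) > 0.95 else 0.0)
        else:
            raise ValueError(f"unknown waveform: {kind}")
    return out


if __name__ == "__main__":
    for kind in ("sine", "square", "pulse"):
        w = waveform(kind)
        print(f"{kind:>6}: true RMS {true_rms(w):.3f}, "
              f"average-responding reads {average_responding(w):.3f}")
```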

Disadvantages of Digital Meters:

While digital meters offer significant advantages, they also have certain limitations that users should be aware of.

- Requires Power: Digital meters are active devices and always need a battery or external power source to operate. This is a crucial consideration for remote or long-term monitoring applications where power might be scarce. Analog meters, in contrast, can operate passively without an external power source for basic measurements.

- Cost (for advanced features): While basic digital multimeters are now very affordable, high-end digital meters with extensive features such as True RMS, advanced data logging, high bandwidth, and specialized functions can be much pricier than their analog alternatives.

- “Stair-step” Reading for Rapidly Changing Signals: When measuring rapidly fluctuating signals, the discrete nature of digital sampling and display updates can cause the reading to appear to “jump” between values rather than showing a smooth, continuous trend. This “stair-step” effect can make it harder to visually follow fast-changing dynamics or identify transient events without specific features like trending graphs or high refresh rates. Analog meters, with their continuous needle movement, often provide a better visual representation of trends for rapidly changing signals.

- Susceptibility to Electrical Noise: Digital circuitry, especially the ADC and processor, can be more sensitive to electromagnetic interference (EMI) if not properly shielded. Strong electromagnetic fields can induce noise in the digital signals, potentially causing erratic or inaccurate readings.
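The stair-step effect described above comes from two things working together: the display refreshes only a few times per second, and each value is rounded to the display's resolution. The sketch below, assuming a hypothetical update rate of 3 readings per second and 0.1 V resolution (the function name is also illustrative), shows a smoothly rising voltage turning into a sequence of held, jumping values.

```python
def displayed_values(signal, duration_s, updates_per_s, resolution):
    """Values a digital display would show: sampled at each refresh, then rounded.

    Returns (time, displayed value) pairs, one per display update.
    """
    steps = int(duration_s * updates_per_s)
    shown = []
    for k in range(steps + 1):
        t = k / updates_per_s
        value = round(signal(t) / resolution) * resolution
        shown.append((t, round(value, 10)))  # tidy floating-point noise
    return shown


if __name__ == "__main__":
    def ramp(t):
        """Hypothetical input rising smoothly at 2 V per second."""
        return 2.0 * t

    for t, v in displayed_values(ramp, duration_s=2.0, updates_per_s=3, resolution=0.1):
        print(f"t = {t:4.2f} s  display shows {v:4.1f} V")
```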

Accuracy and Precision:

The Cornerstones of Measurement Reliability

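In the realm of scientific and industrial measurement, accuracy and precision are paramount. Though frequently used interchangeably, these terms describe separate yet complementary facets of measurement quality: accuracy is how close a measurement is to the true value, while precision is how closely repeated measurements agree with one another.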
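One common way to quantify the two ideas is to take repeated readings of a known reference: the offset of their mean from the reference (bias) reflects accuracy, and their scatter (standard deviation) reflects precision. The reference value, readings, and function name below are invented for illustration.

```python
from statistics import fmean, stdev


def accuracy_and_precision(readings, reference):
    """Return (bias, spread) for repeated readings of a known reference.

    bias   = mean reading minus reference (closer to 0 means more accurate)
    spread = sample standard deviation (smaller means more precise)
    """
    return fmean(readings) - reference, stdev(readings)


if __name__ == "__main__":
    reference = 10.000  # hypothetical calibrated 10 V source
    meters = {
        "precise but inaccurate": [10.21, 10.20, 10.22, 10.21, 10.20],
        "accurate but imprecise": [9.85, 10.12, 9.94, 10.10, 9.99],
    }
    for label, readings in meters.items():
        bias, spread = accuracy_and_precision(readings, reference)
        print(f"{label:>24}: bias {bias:+.3f} V, spread {spread:.3f} V")
```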

Resolution vs. Readability:

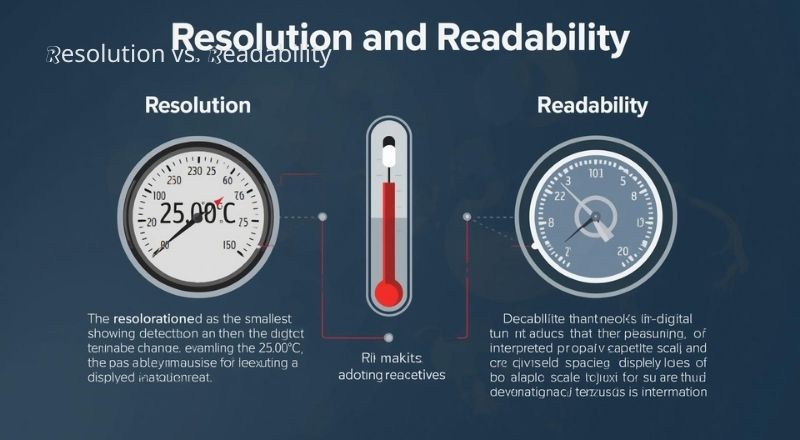

- Resolution describes the smallest change a measuring instrument can detect and display. A digital thermometer with a resolution of 0.1°C can distinguish between 25.0°C and 25.1°C. Higher resolution generally implies the ability to capture finer details in the measurement.

- Readability refers to the ease of interpreting a measurement from an instrument’s display. High-resolution readings can be hard to read if the display is poorly lit, too small, or cluttered. Analog scales, for instance, often need careful observation to avoid misinterpretations, especially with closely spaced lines.

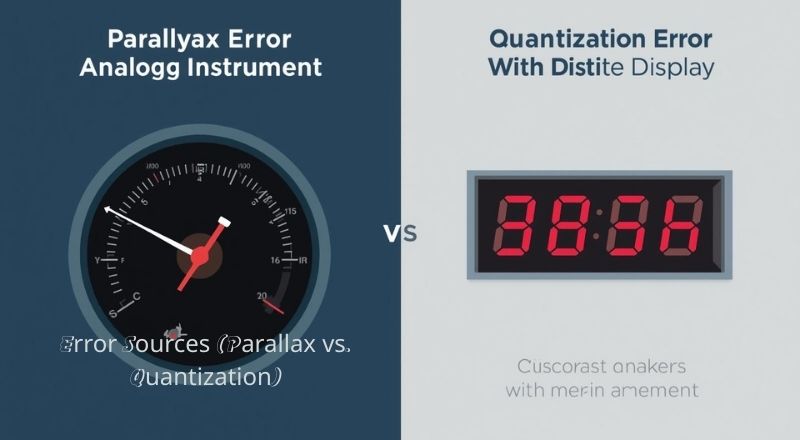

Error Sources (Parallax vs. Quantization):

- Parallax error is a common issue in analog instruments, occurring when the observer’s eye is not directly perpendicular to the measurement scale. This angular misalignment can lead to an apparent shift in the reading, making the measurement appear higher or lower than its actual value. It is particularly prevalent with instruments like rulers, thermometers, and ammeters with needle indicators.

- Quantization error is inherent in digital instruments. Since digital displays can only show discrete values (e.g., 25.0, 25.1, 25.2), any continuous analog input is rounded to the nearest available digital value. This rounding introduces a small, unavoidable error of up to half the instrument's resolution. For example, if a thermometer has a resolution of 0.1°C, a true temperature of 25.05°C would be displayed as either 25.0°C or 25.1°C, introducing a quantization error of up to 0.05°C.

Readability and Interpretation: Understanding the Data

Beyond raw numbers, how measurement data is presented significantly impacts its utility and user comprehension.

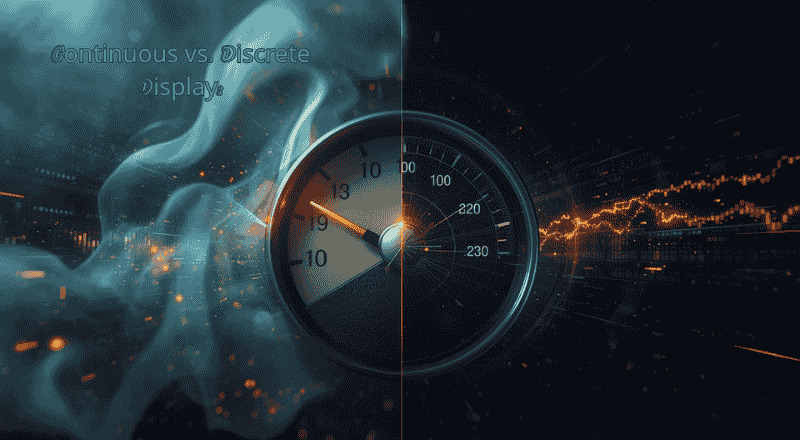

Continuous vs. Discrete Display:

- Continuous displays, typically found in analog instruments with needles or fluid levels, provide a visual representation of the measurement’s progression. They offer a sense of the value fluctuating smoothly over time, which can be beneficial for observing trends or rapid changes. However, precise numerical readings can be challenging to obtain.

- Discrete displays, characteristic of digital instruments, present numerical values at specific points in time. Although precise for single readings, they do not immediately show the rate or direction of change without sequential observations.

- Visual Trend Analysis: The ability to easily perceive trends in data is crucial for many applications.

Graphs reveal changes, patterns, and anomalies over time. Many modern instruments provide this through built-in trend displays or by interfacing with analysis software.

Without dedicated graphing functions, sequential discrete readings can still reveal trends, though interpreting them takes more mental effort than reading a plot.
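Extracting a trend from sequential readings can be automated with an ordinary least-squares line fit, which is one straightforward choice among several (a moving average is another). The readings, the 10-second interval, and the function name here are hypothetical.

```python
def trend_slope(times, values):
    """Least-squares slope of values versus times (value units per time unit)."""
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two matching (time, value) pairs")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    den = sum((t - mean_t) ** 2 for t in times)
    if den == 0:
        raise ValueError("times must not all be identical")
    return num / den


if __name__ == "__main__":
    # Hypothetical motor winding temperature logged every 10 s.
    times_s = [0, 10, 20, 30, 40, 50]
    temps_c = [41.2, 41.9, 42.3, 43.1, 43.6, 44.4]
    slope = trend_slope(times_s, temps_c)
    print(f"trend: {slope * 60:+.2f} degrees C per minute")
```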

Functionality and Versatility: Adapting to Diverse Needs

The utility of a measuring instrument is often defined by its functional capabilities and adaptability to various measurement scenarios.

Single vs. Multi-Parameter Measurement:

- Single-parameter instruments are designed to measure only one specific physical quantity, such as temperature, pressure, or voltage. They are often optimized for accuracy and precision in that particular measurement.

- Multi-parameter instruments, also known as multimeters or all-in-one devices, can measure several different quantities. This versatility is highly beneficial when monitoring multiple variables simultaneously, or when space and budget are limited. Examples include environmental monitors measuring temperature, humidity, and atmospheric pressure, or electrical multimeters measuring voltage, current, and resistance.

- Advanced Features (Auto-ranging, Data Logging, Statistical Analysis): Modern instruments often incorporate sophisticated features that enhance their usability and data handling capabilities.

- Auto-ranging automatically adjusts the instrument’s measurement range to provide the most accurate reading, eliminating the need for manual range selection by the user. This prevents overflow errors and ensures optimal resolution.

- Data logging lets the device capture measurements over time, usually at intervals chosen by the user. This is invaluable for long-term monitoring, unattended measurements, and capturing transient events. Logged data can often be downloaded for further analysis.

- Statistical analysis features enable the instrument to perform real-time calculations on the measured data, such as averages, standard deviations, maximums, and minimums. This gives quick insight into the data's characteristics and helps in understanding variability and trends.
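An instrument computing statistics in real time cannot always store every reading, so a streaming formulation is useful. The sketch below uses Welford's online algorithm, a standard technique chosen here for illustration rather than something the text prescribes, to track count, mean, standard deviation, minimum, and maximum with constant memory; the same tracker doubles as a min/max hold. The class name and readings are hypothetical.

```python
import math


class RunningStats:
    """Streaming statistics (Welford's method) plus min/max hold."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean
        self.minimum = math.inf
        self.maximum = -math.inf

    def add(self, x):
        """Fold one new reading into the running statistics."""
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)
        self.minimum = min(self.minimum, x)
        self.maximum = max(self.maximum, x)

    @property
    def stdev(self):
        """Sample standard deviation (0.0 until at least two readings)."""
        return math.sqrt(self._m2 / (self.count - 1)) if self.count > 1 else 0.0


if __name__ == "__main__":
    stats = RunningStats()
    for reading in [230.1, 229.8, 231.4, 228.9, 230.6]:  # hypothetical mains volts
        stats.add(reading)
    print(f"n={stats.count} mean={stats.mean:.2f} sd={stats.stdev:.3f} "
          f"min={stats.minimum} max={stats.maximum}")
```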

Power Requirements: Sustaining Operation

The power source and consumption characteristics are critical considerations, particularly for portable or remote applications.

Passive vs. Active Operation:

Passive instruments do not require an external power source for their operation. They typically use a physical phenomenon to generate a reading (e.g., a liquid-in-glass thermometer). While simple and reliable, their capabilities are often limited.

Active instruments need an external power source, which can come from batteries, AC mains, or a dedicated power adapter. This power operates electronic components, enabling complex functions and higher precision.

Battery Life Considerations: For battery-powered instruments, battery life is a crucial factor.

Power consumption varies significantly depending on the instrument’s complexity, display type (e.g., backlit LCDs consume more power), and the use of advanced features like data logging or wireless communication.

Battery type (e.g., alkaline, NiMH, Li-ion) and capacity directly influence operating duration.

Power management features, such as auto-power off, low-power modes, and efficient circuit design, are essential for extending battery life and ensuring reliable operation in the field.
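A first-order battery-life estimate divides capacity by the average current draw, where the average weights each operating mode by the fraction of time it is active. The capacity, currents, duty cycles, and function names below are hypothetical, and the estimate ignores real-world effects such as self-discharge, temperature, and the meter's cutoff voltage.

```python
def average_current_ma(modes):
    """Duty-cycle-weighted average from {mode: (current_mA, fraction_of_time)}."""
    total_fraction = sum(frac for _, frac in modes.values())
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("time fractions must sum to 1")
    return sum(current * frac for current, frac in modes.values())


def battery_life_hours(capacity_mah, modes):
    """Idealised runtime: capacity divided by average current."""
    return capacity_mah / average_current_ma(modes)


if __name__ == "__main__":
    capacity = 500.0  # hypothetical battery capacity in mAh
    basic = {"measuring only": (2.0, 1.0)}
    busy = {
        "measuring only": (2.0, 0.6),
        "measuring + backlight": (15.0, 0.3),
        "measuring + wireless logging": (25.0, 0.1),
    }
    for label, modes in (("basic use", basic), ("backlight + wireless", busy)):
        print(f"{label:>20}: about {battery_life_hours(capacity, modes):.0f} hours")
```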

Durability and Environmental Factors: Standing Strong Against the Elements

The robustness of a measuring instrument against environmental stressors is vital for its longevity and reliable performance in diverse operating conditions.

Mechanical vs. Electronic Robustness:

- Mechanical robustness refers to the instrument’s ability to withstand physical impacts, drops, vibrations, and general wear and tear. This is particularly important for instruments used in industrial settings, construction sites, or field operations.

- Electronic robustness pertains to the instrument’s resilience against electromagnetic interference (EMI), electrostatic discharge (ESD), power surges, and internal circuit failures. Well-designed instruments incorporate shielding, filtering, and protective circuitry to safeguard their sensitive electronic components.

Temperature, Humidity, Vibration Sensitivity: Instruments are often designed with specific operating ranges for various environmental parameters.

- Temperature: Extreme temperatures (both hot and cold) can affect the accuracy, stability, and lifespan of electronic components and sensors. Operating outside the specified temperature range can lead to drift in readings or even permanent damage.

- Humidity: High humidity can lead to condensation, which may cause short circuits in electronics or promote corrosion. Conversely, low humidity increases the likelihood of electrostatic discharge (ESD). Instruments designed for humid environments often feature sealed enclosures or conformal coatings on their circuit boards.

- Vibration: Constant or sudden vibrations can loosen connections, damage internal components, and affect the accuracy of sensitive sensors. Instruments intended for use in environments with significant vibration (e.g., near machinery or in vehicles) are typically designed with robust casings and shock-absorbing mounts.

Cost-Effectiveness: Balancing Performance and Expenditure

The total cost associated with a measuring instrument extends beyond its initial purchase price.

Initial Purchase Price: This is the upfront cost of acquiring the instrument. It can vary widely based on the instrument’s features, accuracy, brand reputation, and specialized functionalities.

Maintenance and Calibration Costs: These ongoing costs are crucial for ensuring the instrument’s long-term accuracy and reliability.

Maintenance includes routine cleaning, battery replacements, and minor repairs.

Calibration ensures an instrument provides accurate readings by comparing it against a reliable reference standard. Regular calibration, often mandated by quality standards or regulatory bodies, can be a significant recurring expense, especially for highly precise instruments. How often an instrument needs calibration depends on how it is used, the environmental conditions, and the manufacturer's recommendations.
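Because recurring costs accumulate, comparing instruments on total cost over their service life can reverse a decision made on purchase price alone. A minimal sketch with invented prices, calibration fees, and intervals (the function name is illustrative):

```python
def total_cost(purchase, calibration_fee, calibrations_per_year,
               maintenance_per_year, years):
    """Purchase price plus recurring calibration and maintenance over the service life."""
    recurring = (calibration_fee * calibrations_per_year + maintenance_per_year) * years
    return purchase + recurring


if __name__ == "__main__":
    # Two hypothetical instruments evaluated over a 5-year service life.
    cheaper = total_cost(purchase=150, calibration_fee=90, calibrations_per_year=2,
                         maintenance_per_year=20, years=5)
    pricier = total_cost(purchase=400, calibration_fee=90, calibrations_per_year=1,
                         maintenance_per_year=10, years=5)
    print(f"lower purchase price:  total {cheaper}")
    print(f"higher purchase price: total {pricier}")
```

With these made-up figures, the instrument that is cheaper to buy ends up costing more over five years because it needs calibrating twice as often.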

Data Management and Integration: Leveraging Information

How measurement data is handled and integrated into broader systems is increasingly important for efficiency and comprehensive analysis.

Manual Recording vs. Automated Data Acquisition:

- Manual recording involves physically writing down readings from the instrument. While simple and low-cost for infrequent measurements, it is prone to human error, time-consuming, and unsuitable for high-volume data collection or continuous monitoring.

- Automated data acquisition systems use digital interfaces to directly capture data from the instrument, eliminating manual transcription. This method is highly efficient, reduces errors, and allows for real-time monitoring and analysis. It is essential for processes requiring large datasets, high-speed measurements, or remote monitoring.
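At its simplest, automated acquisition means polling the instrument on a schedule and writing timestamped records without anyone transcribing them. In this sketch `read_instrument` is a hypothetical stand-in for whatever driver or interface a real meter provides (for example over USB or RS-232); it only returns simulated values, and the records go to an in-memory CSV file. The other function name is illustrative as well.

```python
import csv
import io
import random
from datetime import datetime, timedelta, timezone


def read_instrument(rng):
    """Hypothetical stand-in for a real meter driver; returns a simulated reading."""
    return round(24.0 + rng.uniform(-0.3, 0.3), 2)


def acquire(count, interval_s, start, rng, out):
    """Write `count` timestamped readings as CSV rows to the file-like `out`.

    Timestamps are computed from `start` rather than read from a clock, so the
    sketch runs instantly; a real logger would wait `interval_s` between polls.
    """
    writer = csv.writer(out)
    writer.writerow(["timestamp_utc", "reading"])
    for i in range(count):
        ts = start + timedelta(seconds=i * interval_s)
        writer.writerow([ts.isoformat(), read_instrument(rng)])


if __name__ == "__main__":
    buffer = io.StringIO()
    acquire(5, 60, datetime(2024, 1, 1, tzinfo=timezone.utc), random.Random(0), buffer)
    print(buffer.getvalue())
```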

Interfacing with Other Systems (PLCs, Computers): The ability of an instrument to communicate with other control systems.